The ghostly black and white image contrasted with the flight data superimposed upon it. Airspeed, altitude, magnetic heading—even the current attitude and flight path were clearly presented as I saw through them to the scene outside. The computer-generated synthetic terrain on my primary display agreed nicely with what I saw on the camera scene, despite the fact that the night was moonless and the city lights far away. The dark valley floor stood out from the much lighter tree tops, and it was easy to see my target as I manipulated the controls and locked on to the approach path—this would all come together nicely in the next few seconds. …

Afghanistan? Iraq? Some Central American interdiction mission? We’ve all seen these images on evening news shows or YouTube videos: the stabilized image suddenly lighting up with the explosion of a laser-guided missile or bomb. But not this time. This was a peaceful, civilian operation; in fact, it was a personal mission: landing my homebuilt airplane at a municipal runway at night in the mountains of Southern California. What was new was the FLIR pod hanging under our right wing, transmitting a clear picture of what was ahead through the magic of infrared imaging, the same technology used by the military and law enforcement communities to see in the dark. The end goal is the same: to keep everyone safe by clearing away the darkness and letting us see what is hiding in the shadows.

Sitting on the run-up pad, it is already apparent that the FLIR (bottom) shows much more detail than the synthetic vision (top).

Grand Rapids Technologies (GRT) has been a leader in electronic flight instrument system (EFIS) development for the Experimental community since the technology began. Having started with its ubiquitous Engine Information System (installed in thousands of aircraft), which provides engine data to the pilot, the company came to market with a full EFIS in 2004. Development has continued at a steady pace with the addition of synthetic vision (SV), faster processors, better displays, and improved pilot interfaces. One of the benefits of the improved hardware has been the ability to display video directly on the screen, and to overlay that video with flight data. One obvious use for a video input was the addition of a Forward Looking Infrared (FLIR) camera to help pilots see in the dark.

When I was asked if we might like to give the system a try, my first thought was sure, that sounds really interesting. The truth is, I get excited about trying out any new technology, so long as it doesn’t involve explosives; but eagerness to try new things doesn’t necessarily mean that those things will prove useful. My next thought was OK, so FLIR sounds cool and high tech, but how useful is it, really? FLIR helps you see in the dark—and it does a remarkable job of that. But heck, if it’s dark, you should only be flying to places with lights, right? And when it comes to seeing through clouds, we already have SV to keep us out of terrain. If you are in the clouds, you should be IFR, and if you follow the rules and procedures, they keep you out of the terrain as well, don’t they? So why do I need a FLIR camera; won’t it just be redundant?

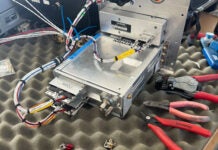

The complete FLIR kit consists of the camera, housing, video adapter and necessary wiring. (The inspection cover is customer supplied.)

Well sure, these are all valid points. But then I began to wonder what the FLIR can do that the SV can’t. First, and most obvious, is the fact that SV can only show you terrain, and that terrain is only as current as the database from which it was generated. SV can’t show you things like runway detail; the FLIR will show you taxiway striping. SV also can’t show you what is happening right now, like the dog or coyote running across the runway in the dark. FLIR can show you those things, just as it can show you the local high school kids drag racing on the deserted runway in the middle of the night. The FLIR can also be useful if you experience one of those ultimate terrible moments: an engine failure at night. The camera clearly shows things like clearings, fields and roads, easily picking them out from the forests, mountains and rocks that would lead to an unfriendly arrival for any aircraft. The FLIR takes the mystery from the night, and this can be a huge margin-builder for those who like to (or need to) operate when the sun isn’t shining.

Installation

The installation was remarkably simple. The FLIR pod is a beautiful aerodynamic sculpture of carbon graphite composite. The pod is made by Bonehead Composites for GRT to house a camera sourced from FLIR Systems, Inc., and mounts quite easily underneath the wing to a typical inspection cover. I made an extra inspection cover for the testing, since I knew I’d need to cut openings for wiring and drill mounting holes, and I didn’t want to ruin the original. This took about 20 minutes and a scrap of 0.025-inch aluminum that I had lying around. The camera interface is simple: a shielded RCA composite video cable, power, and ground are all that it requires. I ran these wires through a snakeskin and routed them through the wing into the fuselage, then up behind the panel. A small video adapter unit (provided by GRT) takes the composite video and converts it magically into something that the GRT Hx display unit can use via the USB port. I connected the power to the main bus through a spare 2-amp breaker, and the ground to the airframe, and it was ready to power up.

The author mounted the camera under the left wing of his RV-8 and noticed no appreciable drag from the installation.

I had never used the video capability of the GRT screens before, but configuration turned out to be simple. Selecting the primary flight display on the EFIS screen, I simply scrolled through the menu until I found “Video,” and toggled it from “Off” to “OVR” (Overlay). This brought the ghostly image to the screen, with the data displayed above it. Hmmm…vertical black and white lines. I wonder…oh, that’s the corrugated metal of the hangar door. OK, so we know it works, but after opening the door, something was funny…oh, the world was upside down and backwards. I went into the setup pages for the display unit, and sure enough, the video options allowed me to rotate the view 180 degrees, and all was right with the world. Installation was complete, ready for flight testing.
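For readers curious why a single 180-degree rotation cures an image that is both upside down and backwards, here is a minimal sketch in Python. It is only an illustration of the geometry, not GRT's implementation; the function name `rotate_180` and the tiny sample frame are made up for the example.

```python
def rotate_180(frame):
    """Rotate a frame (a list of pixel rows) by 180 degrees."""
    # Reversing the row order fixes "upside down"; reversing each row
    # fixes "backwards". Doing both is a 180-degree rotation.
    return [row[::-1] for row in frame[::-1]]


# A tiny 2x3 "frame" as an inverted camera would deliver it
# (hypothetical pixel values, chosen so the result is easy to read).
inverted = [
    [6, 5, 4],
    [3, 2, 1],
]

upright = rotate_180(inverted)
print(upright)  # [[1, 2, 3], [4, 5, 6]]
```

Rotating twice returns the original frame, which is why the setup option behaves like a simple toggle for a camera mounted either way up.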

One of the questions I had in the back of my mind was answered right away: How does the FLIR behave in daylight? I had used image intensification cameras before, and they are simply overloaded by too much light because they are amplifying the visible spectrum. But the FLIR is not looking at the visible spectrum; it is looking at heat signatures, and right off the bat, I could tell that it really didn’t care if it was day or night. There is a difference of course—objects that are heating up due to sunlight shining upon them will be brighter during the day than at night. But overall, I was surprised and pleased at how useful the image was during the day. This bodes well for low-visibility, daytime operation, such as an instrument approach to minimums.

An inflight picture over the mountains shows the view available in the FLIR image and how well it coincides with the synthetic image.

As I fired up the airplane and prepared to taxi, I got my next surprise—the details were outstanding. I was able to pick out the pavement markings on the taxiways quite clearly; it would be easy to taxi without looking outside at all. You could see the lines on the pavement, you could see hangars and other buildings, and you could see people. Getting to the runway with a hood on would be a piece of cake; and taxiing in poor visibility would be much safer. The image was very stable; there was no hint of airplane vibration, and the pod structure was quite rigid, so there was no relative motion with the airframe. Other aircraft were rendered in great detail, and I could even read N-numbers as they taxied by. It was no problem at all positioning myself on the run-up pad, purely with reference to the FLIR, with another aircraft in front of me. (OK, I certainly did look out the window to make sure.)

The airport at Big Bear Lake in California is up in a mountain valley, and the runway aligns with the valley floor. There are mountain ridges on each side, so that when you are pointed perpendicular to the runway, you are looking at the hills—and in fact, that is exactly what I could see on the FLIR screen—hills with trees on them. Once I lined up for takeoff, I could see the valley and sky, with the ridges on each side. I once again had no doubt that I could have operated with no actual view outside in relative safety; I could see obstacles, including moving obstacles, and it was just as if I was peering out an EFIS-sized window at the (black and white) world.

Looking from the mountains to the desert. Dark regions are cold, light regions are warm, and a first-time FLIR pilot needs to learn how to interpret the views. In this case, the light images in the mountains are trees, the dark spots barren rock.

Flying with FLIR

One of the things I was curious about was how the pod would affect the aerodynamics of the RV-8. As it turned out, neither my wife (when she was flying) nor I could notice any change in the amount of rudder required for climb or straight and level flight, and the airplane’s speed seemed unaffected. This tells me that GRT has done a good job of designing the pod fairing for low drag, something that performance-oriented builders and pilots will appreciate. For those who remember radar pods for singles back in the 1980s, this is a whole different animal: quite compact and easy to miss on a low-wing airplane unless you are looking for it. If you had worries about drag, you can put them to rest, at least at RV speeds.

As we exited the airport traffic area, I began to learn how to truly interpret FLIR images. It takes a little bit of thinking to understand that the world looks different in hot and cold terms. Dark is cold, and light is hot—not something we are used to interpreting. So a lake, for instance, is cold, and shows up as a large flat dark area. Trees are warm because they are absorbing and radiating heat from the sun, so they are bright. A large, plowed farm field in the sun is well known by pilots to generate thermals—and of course, that means it is hot, which means that it is bright. So a dark flat area could be a nice place to land if it is a field, or a horrible place to land if it is water, and you don’t have floats.

Context and shape will tell you the difference, so it requires a little thinking. I noticed a nice straight area that looked dark, with a bright line alongside it—sure looked a bit like a road or runway to me. But when I looked out the windshield, I realized that it was a row of trees casting a shadow beside it—the trees were bright, the shadow dark. It wasn’t a good runway; it was just a farm-country wind break. I am sure that with just a little observation, FLIR interpretation will become second nature for most—but it is interesting to note the differences.
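The dark-is-cold, light-is-hot idea can be sketched in a few lines of Python. This is a simplified illustration, not how the camera actually processes its image (real thermal cameras apply their own gain and contrast adjustments); the function name `white_hot`, the temperature range, and the scene temperatures are all assumptions made up for the example.

```python
def white_hot(temps_c, t_min, t_max):
    """Map temperatures to 0-255 gray levels: cold is dark, hot is bright."""
    span = t_max - t_min
    levels = []
    for t in temps_c:
        # Clamp to the display range, then scale linearly to 0..255.
        clamped = min(max(t, t_min), t_max)
        levels.append(round((clamped - t_min) / span * 255))
    return levels


# Hypothetical surface temperatures in degrees Celsius.
names = ["lake", "tree shadow", "trees", "plowed field"]
temps = [10.0, 10.0, 30.0, 40.0]

for name, level in zip(names, white_hot(temps, 0.0, 40.0)):
    print(f"{name}: {level}")
# lake: 64
# tree shadow: 64
# trees: 191
# plowed field: 255
```

In this made-up scene the lake and the shadow come out the same shade of gray, which is the reason brightness alone can't tell you what you are looking at; shape and context have to do the rest.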

Looking farther away on this daylight flight, I was impressed at how well I could see mountains in the distance—farther than the 35-mile range of the SV terrain. I could also see down into the Los Angeles basin, and it was quite obvious where the drop began from the high country of the San Bernardino Mountains. The canyon that stretches down from the Big Bear Lake dam was very apparent, and would have been easy to follow using the FLIR alone. It turned out to be just as obvious on the night flight that followed a few hours later, and that is where the camera really showed its stuff.

The night flight began just after sunset; there was still a golden glow on the western horizon out toward the Pacific, but the lights of the town effectively rendered the real terrain out the window dark and invisible. I have flown in and out of the valley many times with synthetic vision on the GRT EFIS, and am very comfortable with its ability to accurately show where the hills are—but I was not sure what to expect from the FLIR. Once again, the FLIR made night into day—it was so much more natural than even the textured SV—and because of the detail, it inspired confidence in its accuracy.

We launched into the night, headed west out of Big Bear, climbing to 10,000 feet to make sure we were above the terrain before doing some maneuvering. While outside we had inky darkness, on the FLIR screen we could make out ski areas, highways, and Lake Arrowhead. The canyons down to San Bernardino were obvious, as was the fall-off to the high desert of the Antelope Valley to the north. Turning the airplane around, we saw the irregular shape of Big Bear Lake reflected accurately on both the FLIR and the SV. The black and white real image coincided perfectly with the artificial picture generated by the terrain database. I actually thought, who needs the real-world view when you can simulate it so well?

With the FLIR providing such good visual information in cruising flight, the final question was how well it would serve us on approach. We set up for a standard VFR pattern to Runway 26, entering an extended left downwind in the valley next to the ski areas. Despite the darkness, we could see the cabins and houses of Big Bear City, along with the ski areas and schools, all on the FLIR. There is a flat, narrow valley floor extending toward the east, and that showed up as a dark area in the distance. As we turned base, Baldwin Lake, a dry lakebed to the east-northeast of the runway, was obvious, and if we’d had an engine failure at that point, it would have been a good place to land. It stood out much more clearly as a flat, inviting place on the FLIR than it did on the SV display. As we turned final, the runway environment was obvious by all three means of visualization: the FLIR, synthetic vision and real-world view. The dark night with runway lights that we could see out the window was almost the least inviting of the three. Synthetic vision showed the runway on the brown terrain, and the FLIR showed the runway, the parallel taxiways, the run-up pad, the hangars and the ramp lighting. It was hardly a challenge to line up and shoot the approach, just as if it were daylight.

This image shows Big Bear Lake nestled in the San Bernardino Mountains. Both the synthetic vision and FLIR show the lake and mountains, but the FLIR does so with more detail.

The Case for FLIR

After comparing the view outside to synthetic vision and the FLIR, it is obvious to me that while nothing beats the real view in daylight, the FLIR has some advantages over synthetic terrain in the dark. First, the detail is remarkably good, although the interpretation will take a little getting used to. This detail is most obvious in the small scale, when you are using things like taxiways alongside the runway to give you a better sense of depth. Second, the fact that the FLIR can show you a truck or airplane stranded on a little-used runway is a huge plus if you are operating at night. However, you do have to weigh these benefits against cost, and ask yourself about the kind of flying that you really do. If you operate under IFR most of the time, obey all of the minimum altitudes and stay on course, it can be argued that synthetic vision isn’t needed at all—terrain and obstacle clearance is assured by the rules of the game. But both SV and FLIR will increase your situational awareness under these conditions, and it should be noted that IFR flights have ended with terrain impacts when pilots lost their awareness of the surroundings.

While SV systems are constantly improving in the way that they show texture to help with depth perception, the texture is all artificial, and doesn’t represent what is really out there. The FLIR, on the other hand, is showing real fields, streams, property boundaries—things that we consciously use to help determine distance and height. If you are flying in familiar territory, the FLIR can very well show you the same landmarks that you are used to using to judge your position relative to a home or neighboring airport—or the traffic pattern.

We were curious about the FLIR’s ability to show towers and wires, and my initial impression is that you shouldn’t count on this. We pointed the airplane at several large transmission towers, yet nothing showed up on the screen, possibly because they simply weren’t that thermally different from their surroundings, and possibly because we couldn’t let ourselves get close enough for them to stand out. But certainly in the near range, anything that has a thermal difference with its surroundings will be highlighted and stand out as an obstacle.

FLIR is becoming a more integral part of our world; we see it all the time on the television news as it is used by the military and police forces. Systems for business jets are showing up more and more often as well, possibly because they are more likely to use smaller airports in the middle of the night than the airlines would frequent. But those systems are quite expensive, as is anything for a certified business jet. Now that companies like GRT are exploring the market, expect to see the option available for the Experimental market, and more pilots choosing to incorporate the tool into their avionics suite. While not cheap, it can add a measure of safety to an aircraft that is used in bad weather and/or at night. Most Experimental aircraft are used much less seriously than that, but for those who want the extra assurance in the dark or in bad weather, a FLIR camera might just be the ticket. I’d keep my eye on this technology; the incremental cost to add it to an advanced airplane is not that high, and the results are quite astounding. Grand Rapids has always been a leader in Experimental EFIS development, and it is nice to see them working on yet another new technology that can add an increment of safety to our field.